Evaluate C-CDA Documents for Structure, Vocabulary, and Compliance

The C-CDA Scorecard automatically grades XML-based clinical documents against conformance and vocabulary standards. Upload a C-CDA file, run the scorecard, and receive a detailed quality assessment with actionable insights.

No setup. No code. Just fast, reliable validation.

Start Using the C-CDA Scorecard

Instantly validate and score your C-CDA documents — free and ready to use.

Why C-CDA Quality Scoring Matters

Clinical documents are only as useful as they are complete and conformant. Inconsistent formatting, invalid codes, and incomplete sections can prevent C-CDA documents from being used effectively in interoperability, supplemental data submissions, or digital quality measurement.

Manual document review is time-intensive, inconsistent, and not scalable. The C-CDA Scorecard automates this process, helping teams understand where their documents fail and how to fix them.

The Solution: Automated Quality Scoring for C-CDA Files

Score, Analyze, and Improve C-CDA Files with Confidence

Quick Upload and Format Validation

Upload a single XML-formatted C-CDA file using the drag-and-drop interface. All files are validated for format before processing.
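Before uploading, a quick local check can catch files that are not well-formed XML or are not CDA documents at all. The sketch below is not the scorecard's own format validation, whose rules are not described here. It only parses the file and confirms that the root element is `ClinicalDocument` in the HL7 v3 namespace (`urn:hl7-org:v3`), which CDA-based documents use. The function name `looks_like_ccda` is illustrative.

```python
import xml.etree.ElementTree as ET
from typing import Tuple

# Namespace and root element name used by HL7 CDA R2, which C-CDA builds on.
CDA_NS = "urn:hl7-org:v3"
CDA_ROOT = f"{{{CDA_NS}}}ClinicalDocument"


def looks_like_ccda(xml_bytes: bytes) -> Tuple[bool, str]:
    """Return (ok, reason) for a quick local pre-upload check.

    This tests only well-formedness and the root element. It does not
    reproduce any conformance or vocabulary rule of the scorecard.
    """
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as exc:
        return False, f"not well-formed XML: {exc}"
    if root.tag != CDA_ROOT:
        return False, f"unexpected root element: {root.tag}"
    return True, "ok"


if __name__ == "__main__":
    # Tiny synthetic document, for illustration only.
    sample = b'<ClinicalDocument xmlns="urn:hl7-org:v3"><title>Demo</title></ClinicalDocument>'
    print(looks_like_ccda(sample))
```

In practice the bytes would come from reading the file in binary mode, so that any encoding declared in the XML prolog is honored by the parser.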

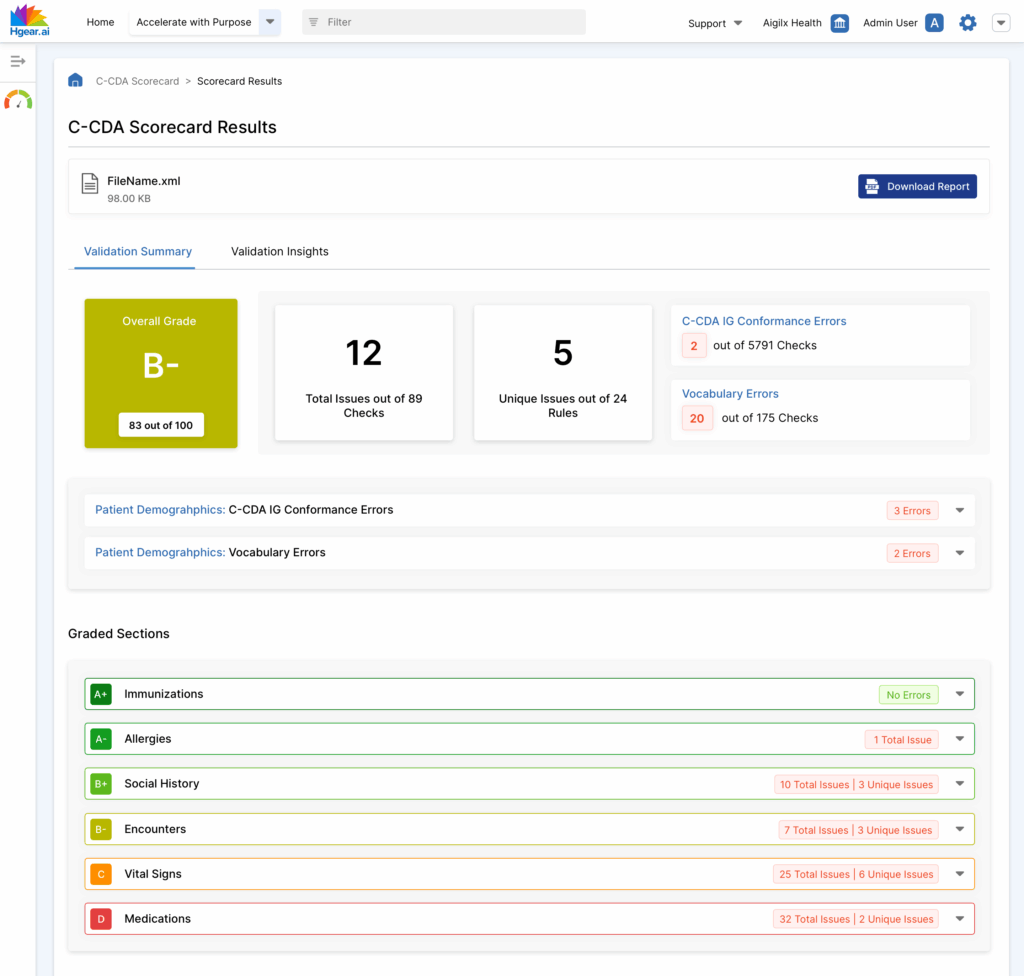

Section-Level Grading

See individual grades for each component, such as:

- Patient Demographics

- Encounters

- Allergies

- Medications

- Problems

- Immunizations

Automated Document Scoring

Each document is evaluated and assigned a letter grade based on rule-based scoring across two models:

- C-CDA IG Conformance (structure and template compliance)

- Vocabulary Conformance (terminology accuracy)

Detailed Rule Descriptions

For each issue, view the violated rule, description, expected value, and XML snippet with line reference. This helps developers and QA teams fix errors quickly.

Error Categorization and Severity

The scorecard breaks down total errors by:

- Number of failed rules

- Type of rule violated (IG or vocabulary)

- Line number and section within the XML document
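A breakdown like the one above can be sketched in a few lines. The `Issue` record and its field names are assumptions made for illustration; they are not the scorecard's actual report format, and the sample records are made up.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    """Hypothetical shape of one reported issue; field names are assumptions."""
    rule_id: str
    rule_type: str  # "IG" or "vocabulary"
    section: str
    line: int


def summarize(issues):
    """Count distinct failed rules, plus issues per rule type and per section."""
    return {
        "failed_rules": len({i.rule_id for i in issues}),
        "by_type": dict(Counter(i.rule_type for i in issues)),
        "by_section": dict(Counter(i.section for i in issues)),
    }


if __name__ == "__main__":
    # Made-up records for illustration only.
    demo = [
        Issue("rule-a", "IG", "Medications", 120),
        Issue("rule-a", "IG", "Medications", 164),
        Issue("rule-b", "vocabulary", "Problems", 310),
    ]
    print(summarize(demo))
```

Counting distinct rule identifiers separately from total issues keeps one rule that fails on many lines from looking like many different problems.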

Score Tracking and History

Grades, errors, and timestamps are stored in the audit log, providing a traceable view of your file quality over time.

Key Capabilities

API Capabilities

Use the C-CDA Scorecard API to scale quality analysis across systems and users:

- Upload and score documents programmatically

- Retrieve grading reports with issue breakdowns

- Query audit history and scoring results by user or date

- Export issue summaries for workflow automation

Trial users can access full API documentation.

Security and Trust

Your data is always protected.

- No uploaded files are stored or retained

- HTTPS encryption is enforced for all connections

- Each session is fully isolated and temporary

Important: This trial environment is for evaluation only. Please upload only synthetic or de-identified C-CDA files.

Improve Document Quality Before You Submit

- Quickly identify gaps in structure and terminology

- Improve data quality for HEDIS, NCQA, and FHIR-readiness

- Provide clear guidance to teams responsible for document generation

- Share error logs and scores with downstream partners or vendors

- Build confidence in supplemental data submissions

Get started instantly — no installation, no setup, no dependencies

Frequently Asked Questions

What formats are supported?

C-CDA documents in .xml format. This includes CCDs and other common clinical summaries.

How detailed is the error report?

Each issue is linked to the failed rule, XML path, and line number, with specific descriptions for easier remediation.

Can I track multiple submissions over time?

Yes. Use the audit report to see a history of uploaded files, scores, statuses, and users.

Can I use this with PHI in production?

Not during trial use. Please upload only synthetic or de-identified data.

Begin Your Data Quality Journey Today

Score, Fix, and Improve C-CDA Quality Automatically

Make your clinical documents more complete, accurate, and standards-ready using a proven scoring tool.

Sign Up to Use the C-CDA Scorecard

Access the free C-CDA Scorecard to evaluate document quality, identify compliance gaps, and improve interoperability readiness.